2018-02-19: The half-size data is currently unavailable.

The data set is available for download at the bottom of this page. The total data set is very large, however. If you have problems downloading the data, please contact us.

If you use this data set, please cite the article:

Henrik Aanæs, Anders Lindbjerg Dahl, and Kim Steenstrup Pedersen (2012): “Interesting Interest Points”. International Journal of Computer Vision, June, 2012.

pdf bibtex

Purpose of the data set

This page provides the robot data set and the associated documentation. The data is provided to give a precise ground truth for benchmarking computer vision methods. The data set consists of 60 scenes acquired from 119 positions, and at each position the scene is illuminated by 19 white LEDs. In total this is 135,660 color images at a resolution of 1200 x 1600. We have mounted the camera on an industrial robot, which gives us very precise camera positioning. The surface of each scene has been scanned using a structured light scanner, which gives us precise 3D surface information. The 19 point light sources make it possible to artificially relight the scene by a linear combination of these 19 images.

This data makes it possible to investigate the performance of computer vision methods very accurately, for example their invariance to changes in scale, light, or viewing position. The data has initially been used for performance evaluation of a number of existing interest point detectors and descriptors, providing new and surprising insight; see the IJCV paper. Our hope is that this data can contribute to the development of future computer vision methods.

The 60 data sets provided here are the first comprehensive data set acquired with our unique robot acquisition device. Our plan is to develop this further, so we very much welcome input to improve the quality of the data and ideas for new data sets. The full data set can be downloaded below. In total, the images amount to 730 Gb of data. There are also reduced versions available.
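As a quick sanity check on the figures above, the image count follows directly from the acquisition grid of scenes, positions, and illuminations. The sketch below also estimates the uncompressed size, assuming 8-bit pixels with 3 color channels. That pixel format is our assumption and is not stated on this page, although the result lands close to the quoted 730 Gb.

```python
# Acquisition grid as described on this page.
NUM_SCENES = 60
NUM_POSITIONS = 119
NUM_LIGHTS = 19
IMAGE_DIMS = (1200, 1600)  # resolution as quoted on this page

# ASSUMPTION: 8-bit RGB, i.e. 3 bytes per pixel (not stated on the page).
BYTES_PER_PIXEL = 3

total_images = NUM_SCENES * NUM_POSITIONS * NUM_LIGHTS
raw_bytes = total_images * IMAGE_DIMS[0] * IMAGE_DIMS[1] * BYTES_PER_PIXEL
raw_gib = raw_bytes / 2**30  # size in units of 2**30 bytes

print(total_images)        # 135660
print(f"{raw_gib:.1f}")    # 727.7
```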

Data

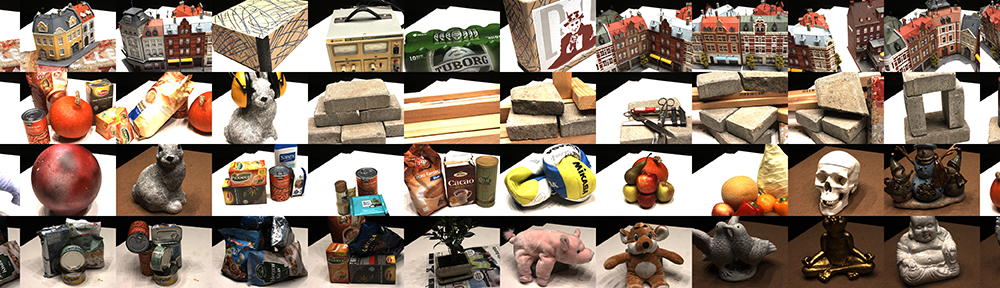

There are 60 scenes, chosen to cover a wide range of scene types. This is done to reflect realistic variation in the data, so that methods tested on the data are exposed to this variation.

In each scene we have 119 camera positions, and the calibration data is available from the calibration file. This file is obtained from the Camera Calibration Toolbox for Matlab. All files available here have been rectified for lens distortion, and information about camera position is available from the calibration file.

In each position we have acquired images with 19 illuminations. Each illumination is obtained using LEDs placed above the scene, and this can be used for scene relighting.
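Relighting by a linear combination of the 19 images taken at one camera position can be sketched as follows. This is a minimal illustration and not the code used in the paper: the function name `relight` and the choice of weights are hypothetical, NumPy is assumed to be available, and the sketch assumes that pixel values respond linearly to light (the page does not describe the camera response).

```python
import numpy as np


def relight(images, weights):
    """Blend per-light images into one relit image.

    images:  array-like of shape (num_lights, height, width, channels)
    weights: array-like of shape (num_lights,), one weight per light
    """
    images = np.asarray(images, dtype=np.float64)
    weights = np.asarray(weights, dtype=np.float64)
    if images.shape[0] != weights.shape[0]:
        raise ValueError("need exactly one weight per illumination image")
    # Weighted sum over the light axis: sum_i weights[i] * images[i].
    combined = np.tensordot(weights, images, axes=1)
    # Round and clamp back to the 8-bit range (assumes 8-bit input images).
    return np.clip(np.rint(combined), 0, 255).astype(np.uint8)


# Tiny synthetic stand-in for 19 images from one position (not real data).
stack = np.stack([np.full((2, 2, 3), i, dtype=np.uint8) for i in range(19)])
uniform = np.ones(19) / 19  # equal contribution from every light
relit = relight(stack, uniform)
```

Setting a single weight to 1 and the rest to 0 reproduces the image for that one illumination, while non-uniform weights shift the apparent light direction.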

We provide scene surface data obtained from a structured light scan of each scene. The data is available as point sets, and the 3D coordinates are in the coordinate system of the calibration data. Image number 25 is intended as the reference image, so the surface reconstruction is made to cover most of image 25. Examples of experiments are shown in our IJCV paper. Remember to scale the camera matrix when using scaled versions of the original 1200 x 1600 pixel images (the ‘cc’ and ‘fc’ parameters in the ‘Calib_Results.mat’ file).
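Scaling the camera parameters for resized images can be sketched as below. In the Camera Calibration Toolbox, ‘fc’ is the focal length and ‘cc’ the principal point, both in pixels, so both scale with the image. The helper names, the numeric values, and the optional half-pixel correction are illustrative assumptions; whether the correction applies depends on how the images were resized, which this page does not specify. The sketch also assumes zero skew.

```python
from typing import List, Tuple

Vec2 = Tuple[float, float]


def scale_intrinsics(fc: Vec2, cc: Vec2, scale: float,
                     half_pixel_correction: bool = False) -> Tuple[Vec2, Vec2]:
    """Return (fc, cc) adapted to an image resized by `scale`."""
    if scale <= 0:
        raise ValueError("scale must be positive")
    fc_scaled = (fc[0] * scale, fc[1] * scale)
    if half_pixel_correction:
        # ASSUMPTION: coordinate (0, 0) is the centre of the top-left pixel,
        # so the image corner sits at -0.5 and must stay fixed when resizing.
        cc_scaled = ((cc[0] + 0.5) * scale - 0.5,
                     (cc[1] + 0.5) * scale - 0.5)
    else:
        cc_scaled = (cc[0] * scale, cc[1] * scale)
    return fc_scaled, cc_scaled


def camera_matrix(fc: Vec2, cc: Vec2) -> List[List[float]]:
    """Build a 3x3 intrinsic matrix, assuming zero skew."""
    return [[fc[0], 0.0, cc[0]],
            [0.0, fc[1], cc[1]],
            [0.0, 0.0, 1.0]]


# Hypothetical values for illustration only; read the real ones
# from Calib_Results.mat.
fc_full, cc_full = (2000.0, 2000.0), (800.0, 600.0)
fc_half, cc_half = scale_intrinsics(fc_full, cc_full, scale=0.5)
K_half = camera_matrix(fc_half, cc_half)
```

For the half-size images the scale is 0.5. The two principal-point variants differ by a quarter of a pixel at that scale, which may matter when comparing against the ground truth surface.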

Download Data

The images of the data set are split into parts to keep an upper limit on the file sizes. They are, however, still very large, with the natural implications for download time. Because the data set is so large, we also provide half-resolution images, which reduce the storage required.

Full resolution images (1200×1600)

- Scene 1-6 (50 Gb)

- Scene 7-12 (52 Gb)

- Scene 13-18 (44 Gb)

- Scene 19-24 (40 Gb)

- Scene 25-30 (47 Gb)

- Scene 31-36 (46 Gb)

- Scene 37-42 (39 Gb)

- Scene 43-48 (48 Gb)

- Scene 49-54 (47 Gb)

- Scene 55-60 (43 Gb)

Half-size images (600×800)

- Scene 1-6 (13 Gb)

- Scene 7-12 (13 Gb)

- Scene 13-18 (11 Gb)

- Scene 19-24 (9.9 Gb)

- Scene 25-30 (12 Gb)

- Scene 31-36 (12 Gb)

- Scene 37-42 (9.6 Gb)

- Scene 43-48 (12 Gb)

- Scene 49-54 (12 Gb)

- Scene 55-60 (11 Gb)
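To plan disk space before downloading, the listed archive sizes can be tallied with a short script. This is only a convenience sketch: the dictionary and function names are ours, and the sizes are copied from the lists above. Note that the full-resolution archives sum to 456 Gb, less than the 730 Gb quoted for the images, presumably because the archives are compressed, though this page does not say so.

```python
# Archive sizes in Gb, keyed by scene range, copied from the lists above.
FULL_RES_GB = {"1-6": 50, "7-12": 52, "13-18": 44, "19-24": 40, "25-30": 47,
               "31-36": 46, "37-42": 39, "43-48": 48, "49-54": 47, "55-60": 43}
HALF_RES_GB = {"1-6": 13, "7-12": 13, "13-18": 11, "19-24": 9.9, "25-30": 12,
               "31-36": 12, "37-42": 9.6, "43-48": 12, "49-54": 12, "55-60": 11}

SCENES_PER_ARCHIVE = 6


def archive_for_scene(scene: int) -> str:
    """Return the scene-range label of the archive holding `scene` (1-60)."""
    if not 1 <= scene <= 60:
        raise ValueError("scene must be between 1 and 60")
    first = ((scene - 1) // SCENES_PER_ARCHIVE) * SCENES_PER_ARCHIVE + 1
    return f"{first}-{first + SCENES_PER_ARCHIVE - 1}"


full_total = sum(FULL_RES_GB.values())
half_total = round(sum(HALF_RES_GB.values()), 1)

print(full_total)                          # 456
print(half_total)                          # 115.5
print(FULL_RES_GB[archive_for_scene(25)])  # 47
```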

3D reconstructions and calibration

Accompanying Code for Evaluation

The following code is, in essence, the code we used for the evaluations in the papers associated with this data set. As such, it should help show how to use the data set and give clues to how it is organized (NB: there is a ReadMe file).

Evaluation code (with reconstructions – 1.3 Gb)

Additional documentation is found in this technical report.

Also, Anders Boesen Lindbo Larsen has integrated this data set into the vlbenchmark framework; see http://github.com/andersbll/vlbenchmarks