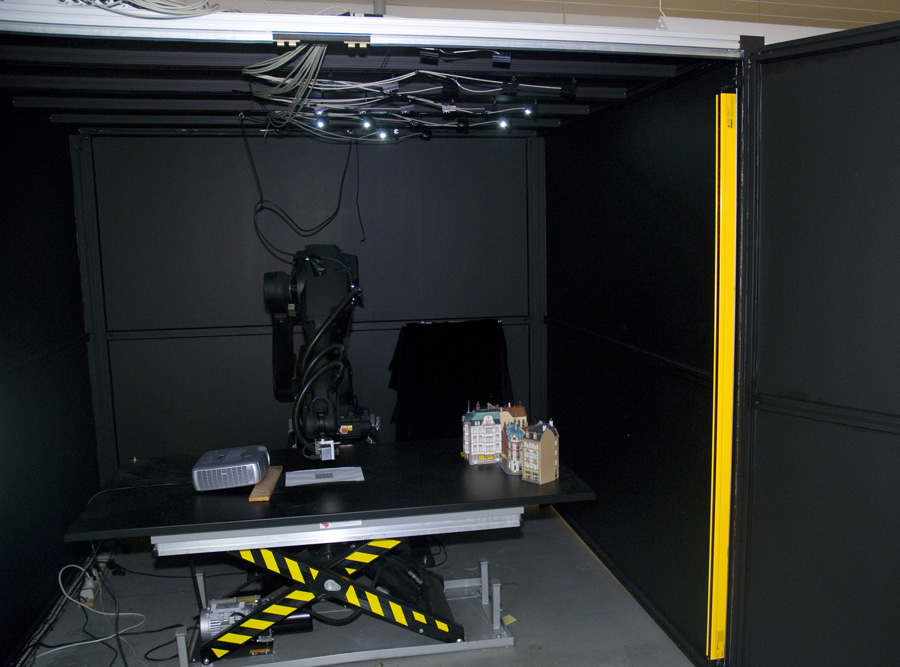

This homepage is an entry point to the data sets generated with our experimental setup, shown in one of its configurations in the image below. The setup consists of an industrial ABB robot enclosed in a black box to keep out stray light (the doors are closed during operation).

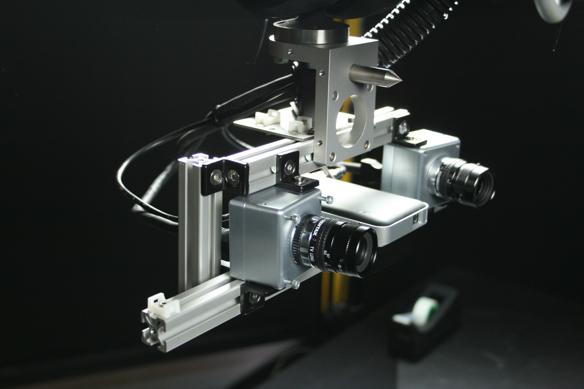

The general idea behind this setup is that we can control the camera position and the lighting (note the LEDs in the ceiling of the box) from a computer, which lets us produce large amounts of high-quality data. In addition to controlling the light and the camera, we have so far also included a structured light scanner, which captures a reference 3D surface geometry of the viewed scene or object. This is particularly relevant when evaluating image matching, since the optical flow, or image correspondences, can be determined from known camera and scene geometry, which we therefore provide.
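To illustrate how correspondences follow from known camera and scene geometry, here is a minimal sketch in plain Python. It assumes an ideal pinhole camera without lens distortion and the convention `x_cam = R X + t`. The intrinsics `K`, the poses, and the 3D point are made-up values for illustration and are not taken from our data sets, and the helper names are hypothetical.

```python
def mat_vec(M, v):
    """Multiply a 3x3 matrix (list of rows) by a 3-vector."""
    return [sum(m * x for m, x in zip(row, v)) for row in M]


def project(K, R, t, X):
    """Project world point X into a pinhole camera with x_cam = R X + t."""
    Xc = [a + b for a, b in zip(mat_vec(R, X), t)]
    if Xc[2] <= 0:
        raise ValueError("point is behind the camera")
    u, v, w = mat_vec(K, Xc)
    return (u / w, v / w)


# Illustrative (assumed) intrinsics: focal length 1000 px, principal point (640, 480).
K = [[1000.0, 0.0, 640.0],
     [0.0, 1000.0, 480.0],
     [0.0, 0.0, 1.0]]
I3 = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]

# Camera 1 at the origin; camera 2 shifted 0.1 units along +x (so t = -R C).
R1, t1 = I3, [0.0, 0.0, 0.0]
R2, t2 = I3, [-0.1, 0.0, 0.0]

# A surface point, e.g. one taken from a reference 3D scan.
X = [0.0, 0.0, 2.0]

p1 = project(K, R1, t1, X)            # pixel in image 1
p2 = project(K, R2, t2, X)            # corresponding pixel in image 2
flow = (p2[0] - p1[0], p2[1] - p1[1])  # displacement between the two images
print(p1, p2, flow)
```

Repeating this for every point of the reference surface that is visible in both images gives a dense set of ground-truth correspondences. A real evaluation would additionally have to handle occlusion and lens distortion, which this sketch ignores.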

When compiling our multiple view stereo data set, we mounted the structured light stereo head on the robot arm. This allowed us to obtain a structured light scan from every position from which an image of the data set was taken.

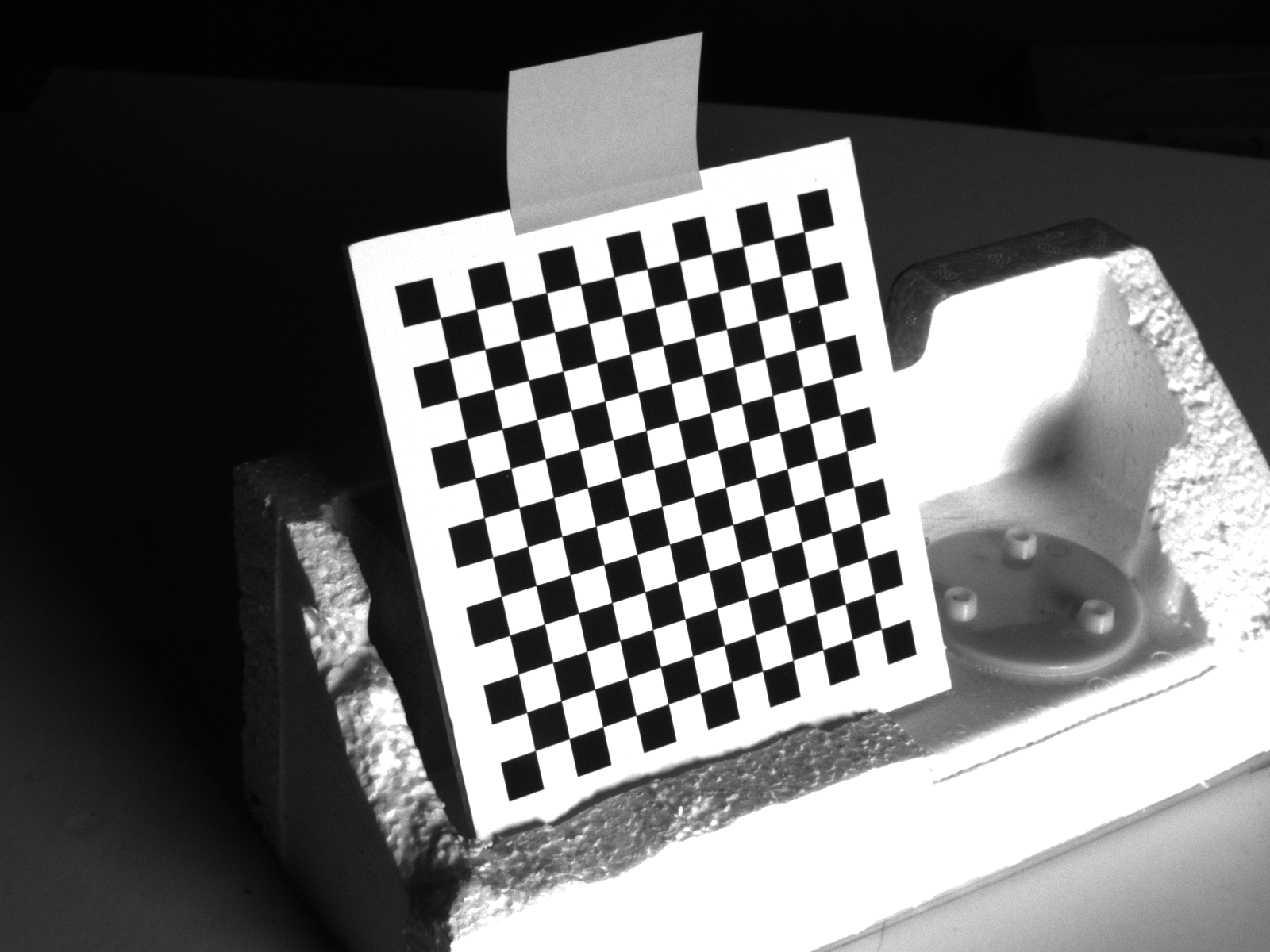

Calibration

While the absolute positioning accuracy of our robot is difficult to control, its repeatability is very high, with only a very small stochastic component. This implies that when a given positioning script is run several times, the positioning will be (almost) identical every time.

To address this positioning issue, we do not directly use (or report) the camera positions sent to the robot. Instead, we determine and report the relative camera positions actually obtained, using the Camera Calibration Toolbox for Matlab.
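As a rough illustration of what a relative camera position means, the sketch below composes two calibrated poses into the transform from the first camera frame to the second. It is plain Python and not the toolbox's own code. It assumes each pose is given as a rotation `R` and translation `t` with `x_cam = R X + t`, and the function names and numeric values are hypothetical.

```python
def transpose(M):
    """Transpose a matrix given as a list of rows."""
    return [list(row) for row in zip(*M)]


def mat_mul(A, B):
    """Multiply two 3x3 matrices given as lists of rows."""
    Bt = transpose(B)
    return [[sum(a * b for a, b in zip(row, col)) for col in Bt] for row in A]


def mat_vec(M, v):
    """Multiply a 3x3 matrix by a 3-vector."""
    return [sum(m * x for m, x in zip(row, v)) for row in M]


def relative_pose(R1, t1, R2, t2):
    """Return (R, t) such that x_cam2 = R x_cam1 + t.

    Assumes both input poses map world points to camera coordinates
    as x_cam = R_i X + t_i.
    """
    R = mat_mul(R2, transpose(R1))  # inverse of a rotation is its transpose
    Rt1 = mat_vec(R, t1)
    t = [b - a for a, b in zip(Rt1, t2)]
    return R, t


# Illustrative (assumed) poses: both cameras rotated 90 degrees about z,
# with different translations.
Rz90 = [[0.0, -1.0, 0.0], [1.0, 0.0, 0.0], [0.0, 0.0, 1.0]]
R1, t1 = Rz90, [1.0, 0.0, 0.0]
R2, t2 = Rz90, [0.0, 1.0, 0.0]

R_rel, t_rel = relative_pose(R1, t1, R2, t2)
print(R_rel, t_rel)
```

Because the relative pose depends only on the two estimated camera poses, it does not rely on the absolute positions commanded to the robot.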

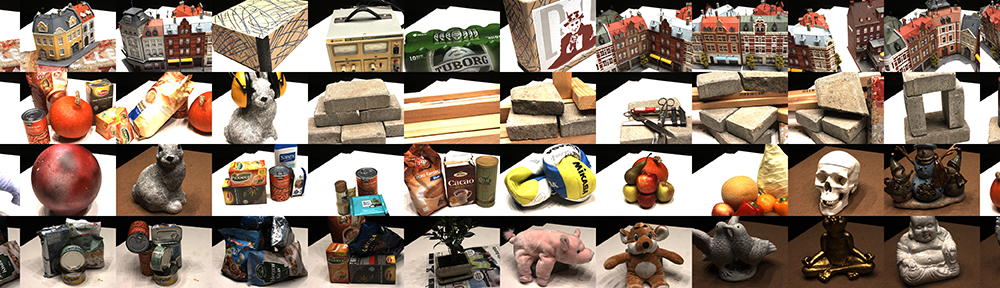

Available data sets

At present we have two available data sets:

- One aimed at evaluating point features, made in 2010 with a journal publication appearing in 2012.

- One aimed at evaluating multiple view stereo, made in 2013 and published in 2014.

These data sets are described further in our published papers and are freely available as citeware, i.e. if you use them, you cite the related work.

We are currently working on more data sets and plan to publish them here once they are finished and a publication describing them has been accepted, so that we receive the necessary academic credit.

People

Many people have been involved in the making and processing of these data sets. The two people primarily responsible are Henrik Aanæs and Anders Dahl, both associate professors at the section for Image Analysis and Computer Graphics at the Technical University of Denmark (DTU).